Long gone are the days when you would spend hours on Google just to find the right answer.

Today, we’re living in the era of artificial intelligence.

Machine learning is now working quietly in the background, giving us the best answers to whatever we search for.

Whether you’re looking for a new pair of shoes, planning to book a hotel room, or searching for a gift for your wife, AI-powered answer engines help you find almost anything.

Based on your conversations, your AI model already understands what you’re interested in. So the response you get after asking a question feels more relevant and tailored to you.

People are no longer only “Googling it.”

They’re asking OpenAI’s ChatGPT, Gemini, Claude, Perplexity, and other AI engines for answers. And the best part? They get those answers instantly without needing to browse through hundreds of links.

But don’t get me wrong — SEO isn’t only about appearing in answers.

Search engine optimization goes beyond placing keywords here and there. It’s about making your brand visible, building trust, and structuring your content properly.

But more on that later…

Let’s take a look at how the fundamentals of search are changing.

There’s a new term in the market called Answer Engine Optimization, and we’re about to explore what it means and how you can get cited by ChatGPT, Google Gemini, or Claude AI.

So without further ado, let’s begin.

Data-Backed Insights

- Did you know that 1 in 10 U.S. internet users turn to generative AI for answers instead of Google Search?

- More than 400 million people now use ChatGPT to get useful answers.

- According to Google, AI Overviews now appear in more than 16% of all Google desktop searches within the United States.

These data-backed insights make it clear how the average user’s mindset is shifting from typing basic queries into Google to asking ChatGPT for answers.

What is Answer Engine Optimization (AEO)?

Answer engine optimization (AEO) is the process of writing or optimizing your online content so that it gets picked up by AI systems. Many AI systems deliver direct answers to people’s queries. To build these answers, they often run a background search across a number of web pages and then compose a response from the information they find. These answers show up in Google’s AI Overviews, voice assistants, and LLMs (such as ChatGPT and Perplexity).

In traditional SEO, your website content is optimized around a set of keywords so it gets picked up by Google. With AI becoming part of Google’s RankBrain algorithm, Google is now smart enough to understand the context behind a user’s search. However, it still delivers its answers as a list of links to detailed articles.

ChatGPT and other LLMs took the burden of finding answers off the user’s shoulders by answering directly and citing sources in context. However, they need a few things from your content: it has to match the searcher’s intent, be clear and well-structured, and come from a trustworthy source.

AEO vs Traditional SEO – How Do They Differ From Each Other?

So here’s the thing, AEO doesn’t change the way searches happen.

The fear that AEO will replace SEO is just a myth.

AEO will never replace SEO; instead, it will complement it.

See, AEO and SEO share the same goal: making the best information discoverable. However, they differ in how they reach people.

SEO is all about ranking your content for specific keywords in relevant searches. It depends heavily on where your pages rank within the organic search results.

AEO, on the other hand, is more about getting cited or mentioned in AI answers.

It streamlines the experience where a search algorithm shows tens of links on Google SERPs. Instead, you get a handful of cited links and a concise, well-structured answer to your specific query.

Are Companies Capitalizing on LLM Visibility?

Smart companies are already capitalizing on this shift.

Many of the clients coming to Branex are now seeking AEO visibility or LLM visibility. We are seeing significant month-over-month growth in the number of such clients.

They now measure success through metrics like appearances in Google AI Overviews or citations in ChatGPT and other LLMs. Meanwhile, brands that focus only on traditional practices are steadily losing visibility.

Still, don’t get us wrong here…

AEO isn’t a departure from SEO. It’s the next chapter in how search engine optimization is evolving. Answer engine optimization builds on SEO fundamentals, but it also favors audience intelligence and cross-channel optimization. It favors structure over strategic keyword placement. It favors brand reputation, social presence, and the other signals AI engines rely on.

How Do Answer Engines Work?

Now, before we dig deeper into the results you can get from answer engine optimization, let’s first understand how an answer engine actually works.

An answer engine interprets the user’s question and then searches the web for the best possible answer. It works out the search intent and looks for the latest relevant information.

It favors data from trusted sources. Instead of showing an entire list of blue links like Google or Bing would, it goes through those web links in the background and extracts the key points.

After doing its research, it formulates an answer in its own words. This direct, conversational answer relies on signals such as clarity of content, structure, expertise, and trustworthiness. To credit the sources it used, it adds small citations so users can trace the articles the answer was drawn from.

So when you’re using ChatGPT or any other LLM, it feels like you’re talking to a smart assistant.

Why Do LLMs Answer Differently Every Time?

Many LLMs rely on a process called real-time retrieval.

They can provide fresh information by using a technique called Retrieval-Augmented Generation (RAG), which many LLMs employ to write more accurate answers. This technique helps an LLM pull in the information that is most relevant and comes from the latest sources. With RAG working at the backend, LLMs don’t just respond to a query based on what their neural networks learned during training. Instead, they refer to a specific set of documents when they judge that a prompt needs fresher information.

They may pull search information from places like Google in the case of Gemini, Bing in the case of ChatGPT, or any other APIs and external databases they have access to.

They may not always have a license or rights to that content, but they work like a smart reader, going through these documents one by one to find the most relevant and up-to-date information.

This ultimately reduces the chance of AI hallucination, a concept that’s often circulated in LLM-ology.
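To make the retrieval idea a bit more concrete, here is a minimal Python sketch of the RAG flow described above. Treat it as a toy under stated assumptions: the `Document` class, the `retrieve` and `build_prompt` functions, the sample pages, and the word-overlap scoring are all hypothetical. Real answer engines use search indexes and far more sophisticated ranking, and this is not how any particular vendor does it.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Document:
    url: str
    text: str
    published: date


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of words."""
    return {word.strip(".,?!").lower() for word in text.split()}


def retrieve(query: str, docs: list[Document], top_k: int = 2) -> list[Document]:
    """Rank documents by word overlap with the query; newer documents win ties."""
    query_words = tokenize(query)
    scored = [(len(query_words & tokenize(d.text)), d.published, d) for d in docs]
    # Drop documents that share no words with the query.
    relevant = [item for item in scored if item[0] > 0]
    relevant.sort(key=lambda item: (item[0], item[1]), reverse=True)
    return [d for _, _, d in relevant[:top_k]]


def build_prompt(query: str, sources: list[Document]) -> str:
    """Place the retrieved passages before the question, with numbered citations."""
    context = "\n".join(f"[{i}] {d.text} ({d.url})" for i, d in enumerate(sources, 1))
    return f"Answer using only these sources:\n{context}\n\nQuestion: {query}"


# Made-up pages standing in for the web.
docs = [
    Document("https://example.com/aeo", "AEO helps content get cited by answer engines.", date(2025, 6, 1)),
    Document("https://example.com/seo", "SEO ranks pages in organic search results.", date(2024, 1, 15)),
    Document("https://example.com/coffee", "The best coffee in town is downtown.", date(2025, 3, 3)),
]

question = "How does content get cited by answer engines?"
sources = retrieve(question, docs)
print(build_prompt(question, sources))
```

The point of the sketch is the order of operations: the relevant page is found first, and only then is the model asked to answer from it, which is why clear, on-topic pages have a better shot at being cited.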

What is LLM Hallucination?

LLM Hallucination, so what is it?

Well, just like the human mind can go astray, so can an LLM. It can give you answers confidently, and they may appear logically correct, but they are factually incorrect. These made-up answers are not supported by any real data whatsoever.

It’s basically the model “filling in the blanks” to make sense of the information. It misinterprets the context and predicts something that looks correct based on patterns rather than something grounded in facts.

This can eventually end up showing incorrect stats, fake citations, wrong names, invented events, or overly confident explanations which have no real evidence backing the information.

It happens because LLMs don’t “know” things from real-time, real-life human experiences.

They simply predict what the next word will most likely be based on their training data. Sometimes, these predictions are so fluent that even a human reader mistakes the creative fiction for fact.
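If “predicting the next word” sounds abstract, this tiny Python sketch may help. It is a deliberately crude stand-in, assuming a made-up training text and a simple word-pair counter (the `corpus`, `following`, and `predict_next` names are mine); real LLMs use neural networks trained on vastly more data.

```python
from collections import Counter, defaultdict

# Tiny made-up training text; a real LLM trains on vastly more data.
corpus = "the cat sat on the mat . the cat ate the fish . the dog sat on the rug ."

# Count which word follows each word in the training text.
following = defaultdict(Counter)
words = corpus.split()
for current, nxt in zip(words, words[1:]):
    following[current][nxt] += 1


def predict_next(word: str) -> str:
    """Return the most frequent follower of `word`, or a placeholder if unseen."""
    if word not in following:
        return "<unknown>"
    return following[word].most_common(1)[0][0]


print(predict_next("the"))
print(predict_next("sat"))
```

The toy model picks a follower purely because that word pair was frequent in its training text. It never checks whether the resulting sentence is true, and that is the same gap that lets a much larger model hallucinate.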

How Does LLM Hallucination Tie to Answer Engine Optimization?

LLM hallucination ties directly to Answer Engine Optimization because answer engines need to produce responses that are not only accurate but also reliable and grounded in trustworthy sources.

AI models tend to hallucinate when the information they find lacks clarity. They often pull information from sources that merely look well-structured and have built up authority through a lot of backlinks.

So it’s like the AI is trusting sources with strong domain authority without actually cross-checking the information. It fails to verify the stats, check for correct references, or even decide which claim is factually correct. It may have access to “safe data,” yet it can still end up making things up.

However, as answer engines become more grounded through citation and retrieval systems, they prioritize content that reduces the chances of LLM hallucination.

If your content answers the searcher’s intent directly and signals credibility, the AI system is more likely to pull your website into its results. That is what improving your AEO game comes down to.

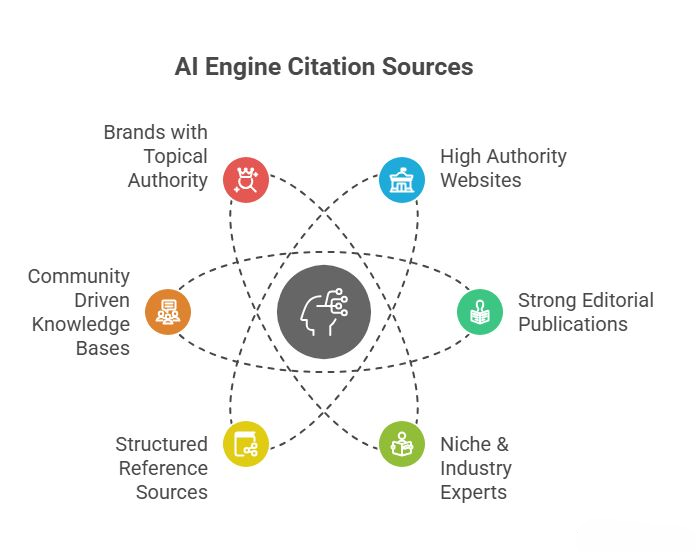

What Sources Do AI Engines Cite Commonly?

As discussed above, there are a few things that answer engines like ChatGPT or Gemini mainly focus on when choosing which sources to cite in their responses. These are:

High Authority Websites – These are websites such as government sites, major organizations, university .edu domains, well-established industry leaders, and so on.

Strong Editorial Publications – These mainly include reputable media outlets, research-backed blogs, and sites with a solid editorial standard (such as clear authorship, citations, and updates).

Niche & Industry Experts – These include websites that specialize in a particular topic and publish the detailed, accurate guides that answer engines simply love.

Structured Reference Sources – These are web sources which are gold for AI systems, like:

– Wikipedia

– Wikidata

– Public datasets

– Manuals, documentation libraries

– Product specifications

Community Driven Knowledge Bases – There are many knowledge bases where discussions take place with complete transparency or a clear version history, such as Stack Overflow, GitHub, and more.

Brands with Topical Authority – Websites that rank well for related keywords.

Why Are Certain Content Types More Likely to Be Cited by Answer Engines?

So when it comes to content, what usually gets cited by answer engines are content types that offer clarity, structure, and trust signals. When information is well-organized, written in a direct question-and-answer style, and backed by credible sources, it becomes much easier for AI models to extract and verify. Content like this shows strong topical authority, especially when it relies on structured data. Also, regularly checking that your information is factually up to date gives answer engines a strong signal that your website is reliable and unambiguous. In short, the more your content eliminates confusion, the more likely AI search systems will use it.

Tips and Tricks for AEO to Increase Search Visibility in LLMs

If you’re planning to write in a way that your content gets cited by LLMs, the good news is… you don’t need to become a huge authority on the Internet.

All you have to do is follow these essential tips and tricks to increase your chances of showing up in LLM and AI search results.

Write in a Conversational Way

If you want your stuff to be cited by AI, you need to be as conversational as you would be with any Tom, Dick, or Harry in real life. Think about how you would react if someone asked you: “Hey, where can I buy the best coffee in town?” Would you beat around the bush by listing the benefits of coffee, or explain what coffee is and why it’s important to drink it every day? Well, no! You would simply answer the question by telling them where they can find the best coffee in town, and if you wanted to help further, you could suggest a few good cafes nearby. It’s the same with getting cited by LLMs.

If you want your content to get picked up by LLMs, you have to be pretty straightforward. When writing articles, web pages, or any other form of online content, just phrase your answers in simple sentences. No need to add too much fluff in intros or write a gazillion words in a blog post for a simple query. Both AIs and users want direct answers and digestible content, so your content should follow that format, and voila, you will get your chance.

Follow LLM-Friendly Formatting

So I went out of my way to explore what gets easily cited in AI Overviews and found that most AI-generated answers contain ordered or unordered lists, which makes sense. AI Overviews and answers generated by LLMs often break large amounts of data into clear chunks, and almost all responses include some form of bulleted information. This matters because you want your content to follow suit. If you’re writing content to get cited by LLMs, format it in an LLM-friendly way.

Write your content by breaking it down into lists, bullet points, tables, and other organized formats. This makes your content much easier to scan and more likely to get picked up.

A few best practices you can follow are:

- Write short sentences; aim for under 20 words. Focus on declarative statements to sound more authoritative and provide direct answers to the basic queries you cover in your blogs.

- Avoid vague headlines, because neither AI nor traditional search values unclear information. Understand the context behind your content and then create a headline that summarizes it perfectly.

- Don’t forget, semantic cues are your best friends. You can start sentences with phrases like “According to,” “the most important,” or “by comparison” when and where it fits.

These writing tricks will help AI models understand the purpose of your writing much more clearly.
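If you want a quick way to apply the “under 20 words” tip to a draft, a small Python helper like the one below can flag the offenders. It is only a sketch: the `long_sentences` function, the `draft` text, and the naive sentence splitting are my own assumptions, and abbreviations such as “e.g.” will trip it up.

```python
import re


def long_sentences(text: str, word_limit: int = 20) -> list[str]:
    """Return the sentences in `text` that contain `word_limit` or more words."""
    # Naive split on ., ! or ? followed by whitespace.
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]
    return [s for s in sentences if len(s.split()) >= word_limit]


draft = (
    "AEO helps your content get cited. "
    "This second sentence keeps going and going with filler words that add "
    "nothing useful for the reader or for any answer engine trying to extract a clear statement."
)

for sentence in long_sentences(draft):
    print(f"{len(sentence.split())} words: {sentence}")
```

Run it over a draft before publishing and shorten whatever it prints. The limit is a parameter, so you can tighten or relax it to suit your own style.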

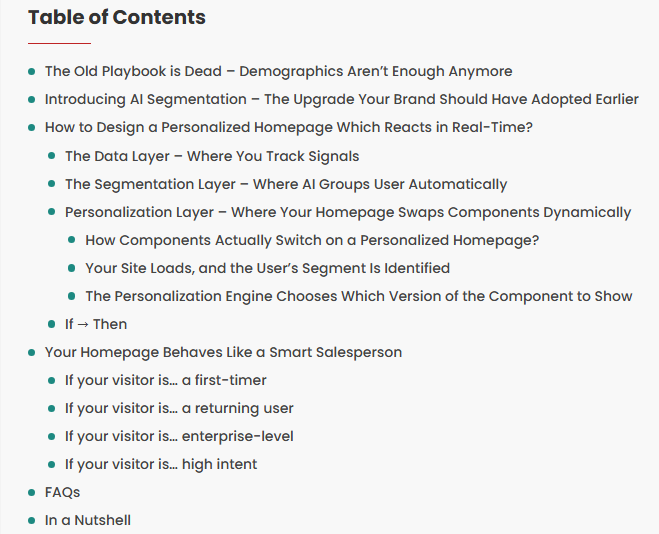

Use Clear & Descriptive Sub-Headings

Remember, if you want your content cited in Google AI Overviews or by other LLM engines, craft your headings so that they speak to your target audience. Make sure you break the content into organized H2s and H3s. In our internal research, we found that AI Overviews pick up pages more readily when their subheadings follow a clear navigational structure. It means the clearer the structure of your pages and web content, the more scannable they will be, and the higher the chance that answer engines cite your article! So make sure to keep all your headings and subheadings crystal clear.

There’s an interesting trick I learned called heading nesting.

When you’re nesting headings, make sure to divide your main topic into topics and subtopics. This way, you can organize them into a primary, secondary, and tertiary heading structure.

H1s will become the main highlight, whereas H2s will present subtopics and H3s will follow suit. After all, you want AIs to summarize the bulk of your content by just looking at the headings, now don’t you?
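One way to sanity-check a nested outline is to make sure no heading skips a level, for instance jumping straight from an H2 to an H4. The Python sketch below does that for a list of (level, title) pairs. The `find_skipped_levels` function and the sample outline are hypothetical, written only to illustrate the idea.

```python
def find_skipped_levels(headings: list[tuple[int, str]]) -> list[str]:
    """Flag headings that sit more than one level deeper than the heading before them."""
    problems = []
    previous_level = 0  # 0 means "no heading seen yet"
    for level, title in headings:
        if level > previous_level + 1:
            problems.append(
                f"H{level} '{title}' skips a level (previous level: {previous_level})"
            )
        previous_level = level
    return problems


# Made-up outline for illustration.
outline = [
    (1, "What Is Answer Engine Optimization?"),
    (2, "How Do Answer Engines Work?"),
    (3, "What Is LLM Hallucination?"),
    (2, "Tips for AEO"),
    (4, "Write in a Conversational Way"),  # jumps from H2 to H4
]

for problem in find_skipped_levels(outline):
    print(problem)
```

Moving back up the hierarchy (H3 to H2) is fine; only a jump downward that skips a level gets flagged.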

Here’s an example where I organized my content in an appropriate hierarchical nest:

Define Concepts Explicitly

Imagine sitting at a coffee shop with your best friend and they suddenly ask you, “Man! I am not so sure why my car often backfires, I am not that familiar with the inner workings of the car engine.”

What should your response be (especially if you’re an authority on car engines)?

Well, you will first want to clear up the concept for your friend.

It’s the same with LLM engines; you can think of them as your friend searching for the best conceptual explanation for a query. When it comes to content for LLMs, you have to be clear-headed.

In every section of your article, make sure you clearly define the key phrases. Of course, you will discuss them in depth later on, but in the beginning, give a clear overview.

Recently, I wrote a blog post titled “How to Use Reddit for SEO in 2025?” As it happens, it got cited by AI Overviews and other LLM search engines. Why? Because I defined the concept.

When writing, never assume your readers are already familiar with what you have to share… and the same goes for LLM engines. When you provide clarity and help readers build up a concept, you’re actually giving search engines extra context about your content’s main topics.

For example, if “Reddit SEO” was the primary term, I saw secondary opportunities like “genuine feedback & interactions,” “genuinity of user engagement” and “Google’s EEAT principles,” which all correlate with it.

FAQs Can Be a Game-Changer

Did you know FAQs can be an exact match for the queries that LLM searches often pick up?

There are entire LLM-based tools, such as Perplexity AI, which rely heavily on query-based searches.

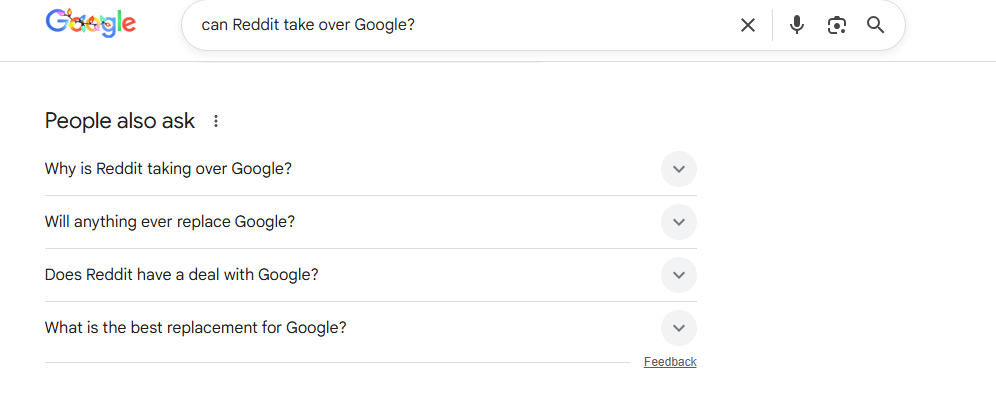

I just searched a very basic query, “Can Reddit take over Google?”, and this is what Google picked up.

Do you see how many FAQs are covered in the “People Also Ask” section?

Therefore, when you’re writing for AI Overviews or LLM search engines, cover as many queries as possible so your content gets cited. Think of all the possible questions anyone could ask you about the topic you’ve covered, and gather them all in a well-organized FAQ section.
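Once you have your questions and answers collected, one optional extra is to describe them in machine-readable form using schema.org’s `FAQPage` type. The Python sketch below builds that JSON-LD from a list of pairs. Note the assumptions: this article’s advice does not depend on the markup, the `faq_jsonld` function and sample FAQs are placeholders of mine, and whether a given answer engine actually reads this markup is not something I can confirm.

```python
import json


def faq_jsonld(faqs: list[tuple[str, str]]) -> str:
    """Build schema.org FAQPage JSON-LD from (question, answer) pairs."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in faqs
        ],
    }
    return json.dumps(data, indent=2)


# Placeholder questions and answers for illustration only.
faqs = [
    ("What is Answer Engine Optimization?", "AEO is the practice of writing content so AI answer engines can cite it."),
    ("Will AEO replace SEO?", "No. AEO complements SEO rather than replacing it."),
]

print(faq_jsonld(faqs))
```

The printed JSON would typically go inside a `<script type="application/ld+json">` tag on the page that shows the same questions and answers to human readers.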

What Are Common AEO Challenges?

Getting your answers cited in searches may not be that difficult overall. Stick to the suggestions above, and voila! You will have a good shot at exposure across most answer engines. Still, there are a few challenges to keep in mind.

AEO isn’t fully decoded yet

For now, answer engines are still in their infancy, with a user base that is small compared to the billions of people who use search engines for daily queries. Approximately 400 million individuals around the world are currently familiar with ChatGPT and actively using it. Besides, no one can be 100% sure that any AEO method guarantees positive results. You may have to go through trial and error until you see exactly what’s working for you and what’s not. Still, a user-friendly content strategy that satisfies user intent and provides direct answers is a safe place to start.

AEO taps into various ecosystems

In SEO, you normally focus on a particular keyword and optimize your website or blog content so it starts showing up in searches. With Answer Engine Optimization, however, the process goes much deeper than simply ranking on Google or Bing. AEO is a whole lot more complex because you have to focus on Google AI Overviews, Bing Copilot, ChatGPT, Perplexity, and so on. If you want to improve your website’s AEO visibility, you also have to account for factors such as featured snippets and voice queries. All platforms differ from each other in one way or another, so finding what works for each can be tricky and calls for a methodical approach.

Tracking AEO performance isn’t straightforward

When it comes to AEO, tracking performance can be a little tricky. Since there are multiple platforms to optimize for and only a few dedicated tools to measure performance, you will hit more roadblocks than you would with SEO. In search engine optimization, you mostly rely on the Google Search Console dashboard, which doesn’t separate AI traffic from traditional sources. However, a few third-party tools such as Ahrefs, Semrush, and Surfer SEO offer dedicated AI tracking. They help prevent scope creep and contribute to a more focused overall strategy.
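If you can export referrer URLs from your own analytics or server logs, a rough first pass at separating AI traffic is to group visits by referrer hostname. The Python sketch below shows the idea. Everything specific in it is an assumption: the hostname list in `AI_REFERRERS` is my guess at what these assistants send and should be checked against your own logs, and the sample `referrers` list is made up.

```python
from collections import Counter
from urllib.parse import urlparse

# Assumed referrer hostnames for AI assistants; verify against your own logs.
AI_REFERRERS = {
    "chatgpt.com": "ChatGPT",
    "perplexity.ai": "Perplexity",
    "gemini.google.com": "Gemini",
    "copilot.microsoft.com": "Copilot",
}


def classify_referrer(referrer_url: str) -> str:
    """Return the AI assistant name for a referrer URL, or 'other'."""
    host = (urlparse(referrer_url).hostname or "").lower()
    if host.startswith("www."):
        host = host[4:]
    return AI_REFERRERS.get(host, "other")


# Made-up referrer values standing in for an analytics export.
referrers = [
    "https://chatgpt.com/",
    "https://www.perplexity.ai/search?q=aeo",
    "https://www.google.com/search?q=aeo",
    "https://chatgpt.com/c/123",
]

counts = Counter(classify_referrer(r) for r in referrers)
print(counts)
```

This only counts clicks that arrive with a referrer, so it will undercount. Citations that never produce a click will not show up at all.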

Concluding Thoughts

Answer Engine Optimization isn’t just a passing concept that will die out; it’s the evolution of how content reaches people in an AI-driven world.

While SEO remains essential for visibility, AEO goes a step further by making your content structured, credible, and easily digestible for AI engines.

By focusing on clear answers, conversational writing, strong subheadings, and trusted sources, you increase your chances of being cited across multiple AI platforms like ChatGPT, Google Gemini, and Perplexity. The road to AEO success may have its challenges, but the opportunity is clear: brands that embrace this shift now will be the ones seen, trusted, and cited in 2026 and beyond.

In the end, it’s not about replacing SEO. It’s about amplifying your content’s reach in a world looking for answers: not just links, but real, original answers to people’s burning questions.